AVX2 depth buffer check and 32-bit to 24-bit data conversion

Introduction

I have been tinkering with my C pseudo-REYES software renderer again lately. I mostly worked on SIMD optimizations to composite multiple render buffers into a single buffer with a depth check. The code makes heavy use of Intel x86 / x86-64 AVX2 instructions, which operate on 256 bits of data. In my case it processes 8 pixels at a time without branches, which greatly improves the performance of my software renderer.

The idea was to replace this compositing loop (the depth buffers are arrays of float values and the input / output buffers are arrays of bytes):

    int di = 0;
    for (int i = 0; i < len; i += bpp) {
        float depth = input_depth_buffer[di];
        if (depth > output_depth_buffer[di]) { // compare z value
            output_buffer[i+0] = input_buffer[i+0];
            output_buffer[i+1] = input_buffer[i+1];
            output_buffer[i+2] = input_buffer[i+2];
            if (bpp == 4) {
                output_buffer[i+3] = input_buffer[i+3];
            }
            output_depth_buffer[di] = depth; // copy depth
        }
        di += 1;
    }

with an optimized version that works on 8 pixels at a time. My idea was to use the AVX compare instruction (vcmpps), which produces a mask, and to feed that mask to the AVX2 masked store instruction (vpmaskmovd), which writes only the elements selected by the mask.
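To make the compare-then-masked-store idea concrete, here is a minimal scalar sketch of what happens to each pixel when pixels are 4 bytes wide. It is a model of the technique, not the renderer's code: the name composite_branchless_u32 is hypothetical, and the masked store is modelled as a bitwise select.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Scalar model of "compare, then masked store" for 4-byte pixels.
   composite_branchless_u32 is a hypothetical helper name. */
static void composite_branchless_u32(const float *in_z, float *out_z,
                                     const uint32_t *in_px, uint32_t *out_px,
                                     size_t n)
{
    for (size_t k = 0; k < n; k++) {
        /* like vcmpps: all ones when the depth test passes, else all zeros */
        uint32_t mask = (uint32_t)0 - (uint32_t)(in_z[k] > out_z[k]);

        /* masked store modelled as a select: old value kept where mask is 0 */
        out_px[k] = (in_px[k] & mask) | (out_px[k] & ~mask);

        /* same select on the raw bits of the depth value */
        uint32_t zi, zo;
        memcpy(&zi, &in_z[k], sizeof zi);
        memcpy(&zo, &out_z[k], sizeof zo);
        zo = (zi & mask) | (zo & ~mask);
        memcpy(&out_z[k], &zo, sizeof zo);
    }
}
```

The AVX2 version does the same thing for 8 pixels per instruction, with the mask living in a 256-bit register instead of a 32-bit integer.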

My buffers can be 32-bit or 24-bit, so I needed the code to work for both. The 32-bit code took me an evening to figure out. The 24-bit version took two days and proved harder because some AVX2 instructions are limited, such as vpshufb, and there is no masked store instruction for 8-bit data. All of this was added in AVX-512, but alas my CPU (i7 7600) doesn't support that instruction set.

32-bit (4 bpp; RGBA) C code

Here is the 4 bpp version using Intel intrinsics:

    // to use Intel Intrinsics with GCC :
    #include <x86intrin.h>

    const int vec_step = 8;
    const int loop_step = bpp * vec_step;

    // only needed by the 3 bpp path
    const __m256i mask_24bpp = _mm256_set_epi8(
        -1, -1, -1, -1, -1, -1, -1, -1,
        28, 28, 28, 24, 24, 24, 20, 20, 20, 16, 16, 16,
        12, 12, 12, 8, 8, 8, 4, 4, 4, 0, 0, 0);

    for (int i = 0; i < len; i += loop_step) {
        // compare z value
        __m256 ymm0 = _mm256_loadu_ps((const mfloat_t *)input_depth_buffer);
        __m256 ymm1 = _mm256_loadu_ps((const mfloat_t *)output_depth_buffer);
        __m256 ymm2 = _mm256_cmp_ps(ymm0, ymm1, _CMP_GT_OS);

        // copy input buffer following mask value
        __m256i ymm3 = _mm256_loadu_si256((const __m256i_u *)input_buffer);
        _mm256_maskstore_epi32((int *)output_buffer, _mm256_castps_si256(ymm2), ymm3);

        // copy depth following mask value
        _mm256_maskstore_ps(output_depth_buffer, _mm256_castps_si256(ymm2), ymm0);

        input_depth_buffer += vec_step;
        output_depth_buffer += vec_step;
        input_buffer += loop_step;
        output_buffer += loop_step;
    }

This version is a straightforward implementation of the idea above. It works well because the instructions map directly onto 32-bit data.

24-bit (3 bpp; RGB) C code

Here is a working 3 bpp version:

    // _mm256_shuffle_epi8 but shuffle across lanes
    // source :
    // https://github.com/clausecker/24puzzle/blob/master/transposition.c#L111C1-L124C2
    static __m256i avxShuffle(__m256i p, __m256i q) {
        __m256i fifteen = _mm256_set1_epi8(15), sixteen = _mm256_set1_epi8(16);
        __m256i plo, phi, qlo, qhi;

        // broadcast the low / high 128-bit half of p to both lanes
        plo = _mm256_permute2x128_si256(p, p, 0x00);
        phi = _mm256_permute2x128_si256(p, p, 0x11);

        // indices > 15 are disabled for the low half, indices < 16 for the high half
        qlo = _mm256_or_si256(q, _mm256_cmpgt_epi8(q, fifteen));
        qhi = _mm256_sub_epi8(q, sixteen);

        return (_mm256_or_si256(_mm256_shuffle_epi8(plo, qlo), _mm256_shuffle_epi8(phi, qhi)));
    }

    const int vec_step = 8;
    const int loop_step = bpp * vec_step;

    const __m256i mask_24bpp = _mm256_set_epi8(
        -1, -1, -1, -1, -1, -1, -1, -1,
        28, 28, 28, 24, 24, 24, 20, 20, 20, 16, 16, 16,
        12, 12, 12, 8, 8, 8, 4, 4, 4, 0, 0, 0);

    for (int i = 0; i < len; i += loop_step) {
        // compare z value
        __m256 ymm0 = _mm256_loadu_ps((const float *)input_depth_buffer);
        __m256 ymm1 = _mm256_loadu_ps((const float *)output_depth_buffer);
        __m256 ymm2 = _mm256_cmp_ps(ymm0, ymm1, _CMP_GT_OS);

        // expand the 8 x 32-bit mask to 8 x 24-bit (upper 8 bytes zeroed)
        __m256i ymm11 = avxShuffle(_mm256_castps_si256(ymm2), mask_24bpp);

        // copy input buffer following mask value
        // note: 32 bytes are loaded and stored although only 24 are pixel data;
        // the 8 extra bytes are written back unchanged, so both buffers need
        // at least 8 accessible bytes after the last block
        __m256i ymm3 = _mm256_loadu_si256((const __m256i_u *)input_buffer);
        __m256i ymm12 = _mm256_loadu_si256((const __m256i_u *)output_buffer);
        __m256i ymm13 = _mm256_blendv_epi8(ymm12, ymm3, ymm11);
        _mm256_storeu_si256((__m256i_u *)output_buffer, ymm13);

        // copy depth
        _mm256_maskstore_ps(output_depth_buffer, _mm256_castps_si256(ymm2), ymm0);

        input_depth_buffer += vec_step;
        output_depth_buffer += vec_step;
        input_buffer += loop_step;
        output_buffer += loop_step;
    }

It is slightly more complex than the 32-bit version and took me a while to figure out. Maybe there is a simpler variant, but it works and doesn't add that many instructions.

The added complexity comes from the lack of an 8-bit masked store and from the fact that the depth mask holds 8 x 32-bit values: vpmaskmovd cannot be used because the pixel data is 24-bit while the mask data is 32-bit.

The missing masked store was easy to work around, at the cost of a few more instructions, with vpblendvb, which works at byte level. This requires two additional vmovdqu instructions: one to load the current output for vpblendvb and one to store the result.
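The two steps described above (widening the mask to 3 bytes per pixel, then blending at byte level) can be modelled in scalar C. This is only a sketch of the data flow: expand_mask_24bpp and blend_bytes are hypothetical names, and the code assumes the little-endian layout of x86, where the low byte of 32-bit element p sits at byte index 4 * p.

```c
#include <stdint.h>

/* Hypothetical helper: turn 8 dword masks into 24 byte masks (3 per pixel),
   leaving the last 8 bytes at zero, like the shuffle with mask_24bpp. */
static void expand_mask_24bpp(const uint32_t mask32[8], uint8_t mask8[32])
{
    for (int i = 0; i < 32; i++)
        mask8[i] = 0;
    for (int p = 0; p < 8; p++) {
        uint8_t m = (uint8_t)(mask32[p] & 0xFF); /* low byte of dword p */
        mask8[3 * p + 0] = m;
        mask8[3 * p + 1] = m;
        mask8[3 * p + 2] = m;
    }
}

/* Scalar model of vpblendvb: take b where the mask byte has its top bit set,
   otherwise keep a. */
static void blend_bytes(const uint8_t a[32], const uint8_t b[32],
                        const uint8_t mask[32], uint8_t out[32])
{
    for (int i = 0; i < 32; i++)
        out[i] = (mask[i] & 0x80) ? b[i] : a[i];
}
```

With a as the current output pixels and b as the input pixels, the last 8 bytes always come from a, which is why the real code can store all 32 bytes back without changing the bytes that follow the 24 pixel bytes.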

vpshufb issue

My first attempt at solving the depth mask layout issue was to use the vpshufb instruction to reorder the depth mask into 8 x 24-bit values, with the remaining bytes filled with 0 (index -1 in the shuffle mask). This produced plenty of visual artifacts. The reason is that vpshufb works within each 128-bit lane independently, so part of the result was wrong and the instruction could not be used on its own.
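To see which bytes go wrong, here is a small scalar model of the 256-bit vpshufb applied to the mask_24bpp indices. It is a sketch of the instruction's documented behaviour (each 16-byte lane is shuffled separately and only the low 4 bits of an index are used); shuffle_in_lane and idx_24bpp are hypothetical names.

```c
#include <stdint.h>

/* Scalar model of the 256-bit vpshufb: each 16-byte lane is shuffled on its
   own, using only the low 4 bits of every index; a negative index gives 0. */
static void shuffle_in_lane(const uint8_t src[32], const int8_t idx[32],
                            uint8_t dst[32])
{
    for (int i = 0; i < 32; i++) {
        int lane_base = (i / 16) * 16;
        dst[i] = (idx[i] < 0) ? 0 : src[lane_base + (idx[i] & 15)];
    }
}

/* Byte indices of mask_24bpp in memory order (byte 0 first). */
static const int8_t idx_24bpp[32] = {
    0, 0, 0, 4, 4, 4, 8, 8, 8, 12, 12, 12, 16, 16, 16, 20,
    20, 20, 24, 24, 24, 28, 28, 28, -1, -1, -1, -1, -1, -1, -1, -1
};
```

Output bytes 12 to 15 sit in the low lane but ask for source bytes 16 and 20, which live in the high lane. In this model they wrap around to source bytes 0 and 4, so pixel 4 receives the mask of pixel 0 and the first byte of pixel 5 receives the mask of pixel 1.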

The solution was to use a few more instructions to get around the 128-bit lane limitation, which is what the avxShuffle function does (it replaced the plain vpshufb). This function comes from the 24puzzle repository, which I found through a Stack Overflow post about the bpp conversion issue.
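The trick used by avxShuffle can be followed step by step with a scalar model. This is my reading of the function shown earlier, written as a sketch: pshufb16 and cross_lane_shuffle are hypothetical names, and indices are assumed to be in the range 0..31, or -1 to produce a zero byte.

```c
#include <stdint.h>

/* 16-byte shuffle model: an index with its top bit set yields 0. */
static void pshufb16(const uint8_t src[16], const uint8_t idx[16],
                     uint8_t dst[16])
{
    for (int i = 0; i < 16; i++)
        dst[i] = (idx[i] & 0x80) ? 0 : src[idx[i] & 15];
}

/* Scalar model of avxShuffle: shuffle the low half and the high half of p
   separately with adjusted indices, then OR the two results together. */
static void cross_lane_shuffle(const uint8_t p[32], const int8_t q[32],
                               uint8_t out[32])
{
    for (int lane = 0; lane < 2; lane++) {
        uint8_t qlo[16], qhi[16], lo[16], hi[16];
        for (int i = 0; i < 16; i++) {
            int8_t v = q[lane * 16 + i];
            /* v > 15: force the top bit so the low-half shuffle gives 0 */
            qlo[i] = (uint8_t)((uint8_t)v | (v > 15 ? 0xFF : 0x00));
            /* v < 16: v - 16 is negative, so the high-half shuffle gives 0 */
            qhi[i] = (uint8_t)(v - 16);
        }
        pshufb16(p, qlo, lo);      /* low half of p, seen by both lanes  */
        pshufb16(p + 16, qhi, hi); /* high half of p, seen by both lanes */
        for (int i = 0; i < 16; i++)
            out[lane * 16 + i] = lo[i] | hi[i];
    }
}
```

For every index exactly one of the two shuffles is active, which is why a plain OR is enough to merge them.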

Final C code (3 bpp and 4 bpp, handles arbitrary buffer lengths)

Note: the depth buffer contains float data; the AVX2 instructions must be adapted for other data types.

The code above is restricted to buffer sizes divisible by 24 or 32 bytes, depending on the bpp, whereas this version handles arbitrary buffer lengths.
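The split between the part processed 8 pixels at a time and the scalar remainder can be sketched in isolation before reading the full listing. The helper below is hypothetical (split_t and split_buffer are not part of the renderer). It also includes one assumption of mine that the listing does not make: since the 3 bpp path touches 32 bytes per 24-byte block, it hands the last block to the scalar loop whenever fewer than 8 bytes would follow it.

```c
#include <stddef.h>

/* Hypothetical helper: split buffer_size bytes into a part handled 8 pixels
   at a time (vec_len) and a scalar remainder (rest). */
typedef struct {
    size_t vec_len;
    size_t rest;
} split_t;

static split_t split_buffer(size_t buffer_size, size_t bpp)
{
    const size_t vec_step = 8;               /* pixels per iteration */
    const size_t loop_step = bpp * vec_step; /* 24 or 32 bytes */
    split_t s;

    s.rest = buffer_size % loop_step;
    s.vec_len = buffer_size - s.rest;

    /* Assumption, not in the original code: the 3 bpp path loads and stores
       32 bytes per block, so keep at least 8 bytes after the last vector
       block by moving that block to the scalar part. */
    if (bpp == 3 && s.vec_len > 0 && s.rest < 8) {
        s.vec_len -= loop_step;
        s.rest += loop_step;
    }
    return s;
}
```

The vector loop would then run while i < vec_len and the scalar loop while i < rest, with buffer_size assumed to be a multiple of bpp.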

    #if (defined(__x86_64__) || defined(__i386__))
    const int float_type = sizeof(float) / 4;
    const int vec_step = (3 - float_type) * 4;
    const int loop_step = bpp * vec_step;
    const int loop_rest = buffer_size % loop_step;
    const int loop_len = buffer_size - loop_rest;

    const __m256i mask_24bpp = _mm256_set_epi8(
        -1, -1, -1, -1, -1, -1, -1, -1,
        28, 28, 28, 24, 24, 24, 20, 20, 20, 16, 16, 16,
        12, 12, 12, 8, 8, 8, 4, 4, 4, 0, 0, 0);

    for (int i = 0; i < loop_len; i += loop_step) {
        // compare z value
        __m256 ymm0 = _mm256_loadu_ps((const mfloat_t *)input_depth_buffer);
        __m256 ymm1 = _mm256_loadu_ps((const mfloat_t *)output_depth_buffer);
        __m256 ymm2 = _mm256_cmp_ps(ymm0, ymm1, _CMP_GT_OS);

    #if RY_BPP == 4
        // copy render buffer following mask value
        __m256i ymm3 = _mm256_loadu_si256((const __m256i_u *)input_buffer);
        _mm256_maskstore_epi32((int *)output_buffer, _mm256_castps_si256(ymm2), ymm3);
    #else
        __m256i ymm11 = avxShuffle(_mm256_castps_si256(ymm2), mask_24bpp);

        // copy render buffer following mask value
        __m256i ymm3 = _mm256_loadu_si256((const __m256i_u *)input_buffer);
        __m256i ymm12 = _mm256_loadu_si256((const __m256i_u *)output_buffer);
        __m256i ymm13 = _mm256_blendv_epi8(ymm12, ymm3, ymm11);
        _mm256_storeu_si256((__m256i_u *)output_buffer, ymm13);
    #endif

        // copy depth
        _mm256_maskstore_ps(output_depth_buffer, _mm256_castps_si256(ymm2), ymm0);

        input_depth_buffer += vec_step;
        output_depth_buffer += vec_step;
        input_buffer += loop_step;
        output_buffer += loop_step;
    }

    // handle arbitrary buffers length; copy rest in case length is not divisible by 24 or 32
    int di = 0;
    for (int i = 0; i < loop_rest; i += bpp) {
        float depth = input_depth_buffer[di];
        if (depth > output_depth_buffer[di]) {
            output_buffer[i+0] = input_buffer[i+0];
            output_buffer[i+1] = input_buffer[i+1];
            output_buffer[i+2] = input_buffer[i+2];
            if (bpp == 4) {
                output_buffer[i+3] = input_buffer[i+3];
            }
            output_depth_buffer[di] = depth;
        }
        di += 1;
    }
    #endif

32-bit (RGBA) to 24-bpp (RGB)

The 24 bpp mask above must be adapted if it is used on bitmap data: it copies the first byte of each 32-bit element three times, so applied to bitmap data it behaves like a grayscale reordering.
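The difference between the two index tables is easier to see when they are generated rather than written out. The sketch below builds both in memory order (byte 0 first), which is the reverse of the argument order of _mm256_set_epi8. The function name build_24bpp_indices and its replicate flag are hypothetical.

```c
#include <stdint.h>

/* Fill idx[32] (byte 0 first) with shuffle indices.
   replicate != 0: copy byte 0 of each 32-bit element three times (depth mask).
   replicate == 0: copy bytes 0, 1, 2 of each 32-bit element (RGBA to RGB). */
static void build_24bpp_indices(int replicate, int8_t idx[32])
{
    for (int p = 0; p < 8; p++)
        for (int c = 0; c < 3; c++)
            idx[3 * p + c] = (int8_t)(4 * p + (replicate ? 0 : c));

    for (int i = 24; i < 32; i++)
        idx[i] = -1; /* unused tail bytes are zeroed by the shuffle */
}
```

In the second table the fourth byte of every pixel is simply never referenced, which is what drops the alpha channel.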

Here is the mask to use with avxShuffle for bitmap data:

    const __m256i mask_24bpp = _mm256_set_epi8(
        -1, -1, -1, -1, -1, -1, -1, -1,
        30, 29, 28, 26, 25, 24, 22, 21, 20, 18, 17, 16,
        14, 13, 12, 10, 9, 8, 6, 5, 4, 2, 1, 0);

Conclusion

This was a fun foray into AVX2 optimization. As far as I know there are not many resources currently available for this sort of conversion (only hints at what the avxShuffle function does, and perhaps code in some repositories). It works well for both 4 bpp and 3 bpp and helped increase the frames per second of my software renderer.

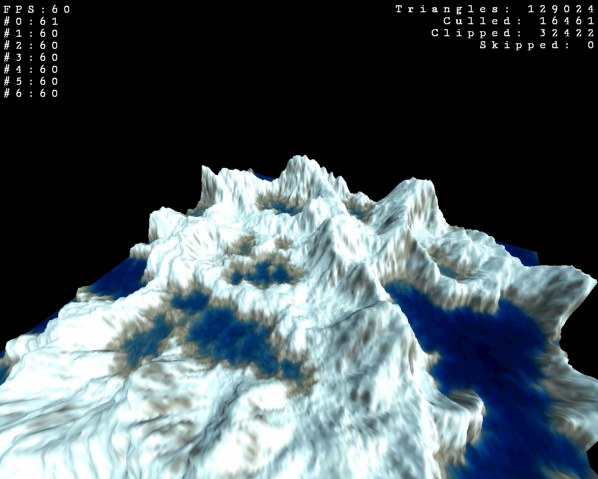

7 CPU cores render this scene; 7 render buffers are composited (with depth buffer) in this screenshot
